In the fast-paced world of technology, staying ahead means continually evolving. For developers, this evolution is often reflected in their CVs, which need to be constantly updated with the latest skills and project experiences. Manually keeping CVs current can be a tedious task, but what if we could automate it? Let’s dive into a story of how we achieved this with Python, Elasticsearch, and some clever AI.

A Journey into Automation

Imagine a bustling tech company, always on the move with new projects and innovative ideas. The HR team is swamped, trying to keep up with the rapid pace of change. Every developer’s CV needs to be a living document, reflecting their latest and greatest accomplishments. Moreover, when new projects come up requiring specific skills, it's crucial to quickly identify which developers are the best fit. This is where our automation journey begins.

Step-by-Step Process

- Retrieve Data from Elasticsearch: We fetch project descriptions and developer details, stored as embedded vectors, from an Elasticsearch index.

- Cluster Data with KMeans: We organize the data into clusters based on these embedded vectors using KMeans clustering.

- Extract Skill Sets: We use a custom text processing module interfacing with an OpenAI model to identify key skills from project descriptions.

- Build the CV: We format the extracted skill sets and project details into a comprehensive CV template.

- Insert Data into the Database: Finally, we store the newly built CV and skill sets into a database using SQLAlchemy.

Step 1: Retrieving Data from Elasticsearch

Our first challenge was to gather all the necessary data. The developers’ experiences and project descriptions were stored in Elasticsearch, but not in their raw form. Instead, we stored embedded vectors of the content, enabling efficient retrieval and processing. With Python, Elasticsearch, and AWS credentials in hand, we could efficiently pull large datasets in batches.

from elasticsearch import Elasticsearch, RequestsHttpConnection

from requests_aws4auth import AWS4Auth

import boto3

credentials = boto3.Session(aws_access_key_id=ACCESS_KEY_ID, aws_secret_access_key=ACCESS_KEY).get_credentials()

awsauth = AWS4Auth(credentials.access_key, credentials.secret_key, 'us-east-1', 'es')

es = Elasticsearch(

hosts=[{'host': 'your-elasticsearch-domain', 'port': 443}],

http_auth=awsauth,

use_ssl=True,

verify_certs=True,

connection_class=RequestsHttpConnection

)

def get_all_documents(index_name):

documents = []

batch_size = 1000

response = es.search(index=index_name, body={"size": batch_size, "query": {"match_all": {}}}, scroll="1m")

documents.extend([hit['_source'] for hit in response['hits']['hits']])

scroll_id = response['_scroll_id']

while True:

response = es.scroll(scroll_id=scroll_id, scroll="5m")

if len(response['hits']['hits']) == 0:

break

documents.extend([hit['_source'] for hit in response['hits']['hits']])

return documents

Step 2: Clustering Data with KMeans

With the data retrieved, the next step was to make sense of it. We decided to cluster the developers based on their embedded vectors using KMeans clustering. After some experimentation, we found that 5 clusters worked best. This allowed us to categorize developers efficiently and understand the various skill sets within the team.

from sklearn.cluster import KMeans

import pandas as pd

# Assume df is your DataFrame with relevant features

optimal_clusters = 5

kmeans = KMeans(n_clusters=optimal_clusters, random_state=42)

df['cluster'] = kmeans.fit_predict(df[['embedded_vector']])

Step 3: Extracting Skill Sets

The magic of AI comes into play when extracting skill sets. We used a custom text processing module that interfaces with an OpenAI model to extract relevant skills from the project descriptions. This involved building prompts and letting the AI do what it does best – identify patterns and extract meaningful information.

from RetrieveOpenAIAnswer import RetrieveOpenAIAnswer

text_processor = RetrieveOpenAIAnswer()

def build_prompt(project_desc, selected_items):

prompt = f"Extract skills from the following project description: {project_desc}\n"

for item in selected_items:

prompt += f"- {item}\n"

return prompt

initial_prompt = build_prompt(project_desc, selected_items)

skill_set_parsed = text_processor.get_answer(prompt=initial_prompt, model='gpt-4-0125-preview')

Step 4: Building the CV

With a treasure trove of skills extracted, it was time to build the CVs. This step involved taking the extracted skill sets and formatting them into a structured document, along with the developer’s details and project descriptions. The result was a comprehensive CV that was not only up-to-date but also reflective of the developer's latest accomplishments.

def build_new_cv_template(skill_set, employee_name, employee_email, employee_title, project_description):

cv_template = f"""

Curriculum Vitae

----------------

Name: {employee_name}

Email: {employee_email}

Title: {employee_title}

Skills:

{', '.join(skill_set)}

Project Descriptions:

{project_description}

"""

return cv_template

new_cv = build_new_cv_template(skill_set_parsed, employee_name, employee_email, employee_title, project_description)

Step 5: Inserting Data into the Database

Finally, the last leg of our journey involved storing these CVs and skill sets into a database. This step ensures that the CVs and skill sets are readily available for future retrieval and analysis.

Results After Automation

Let's take a look at the impact of our automation process with a real-life example.

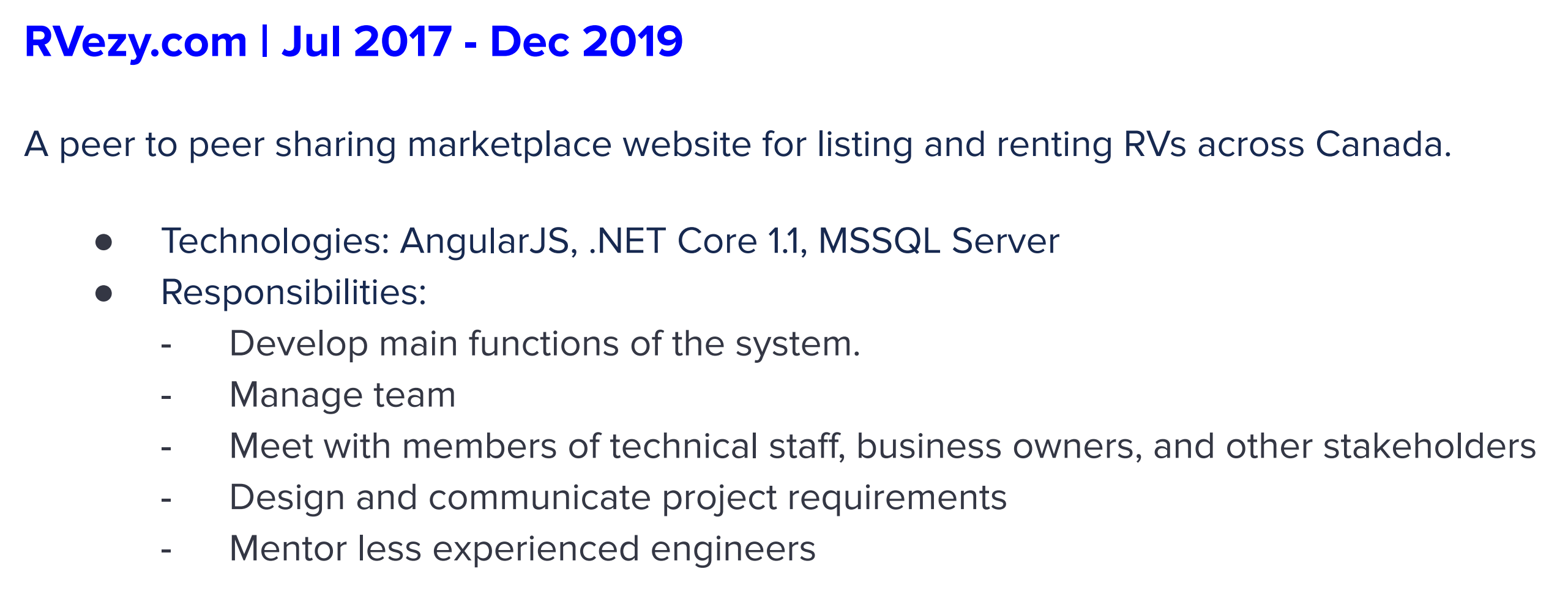

Here is a section of a CV of one of our tech leads who has been working with us for over 10 years:

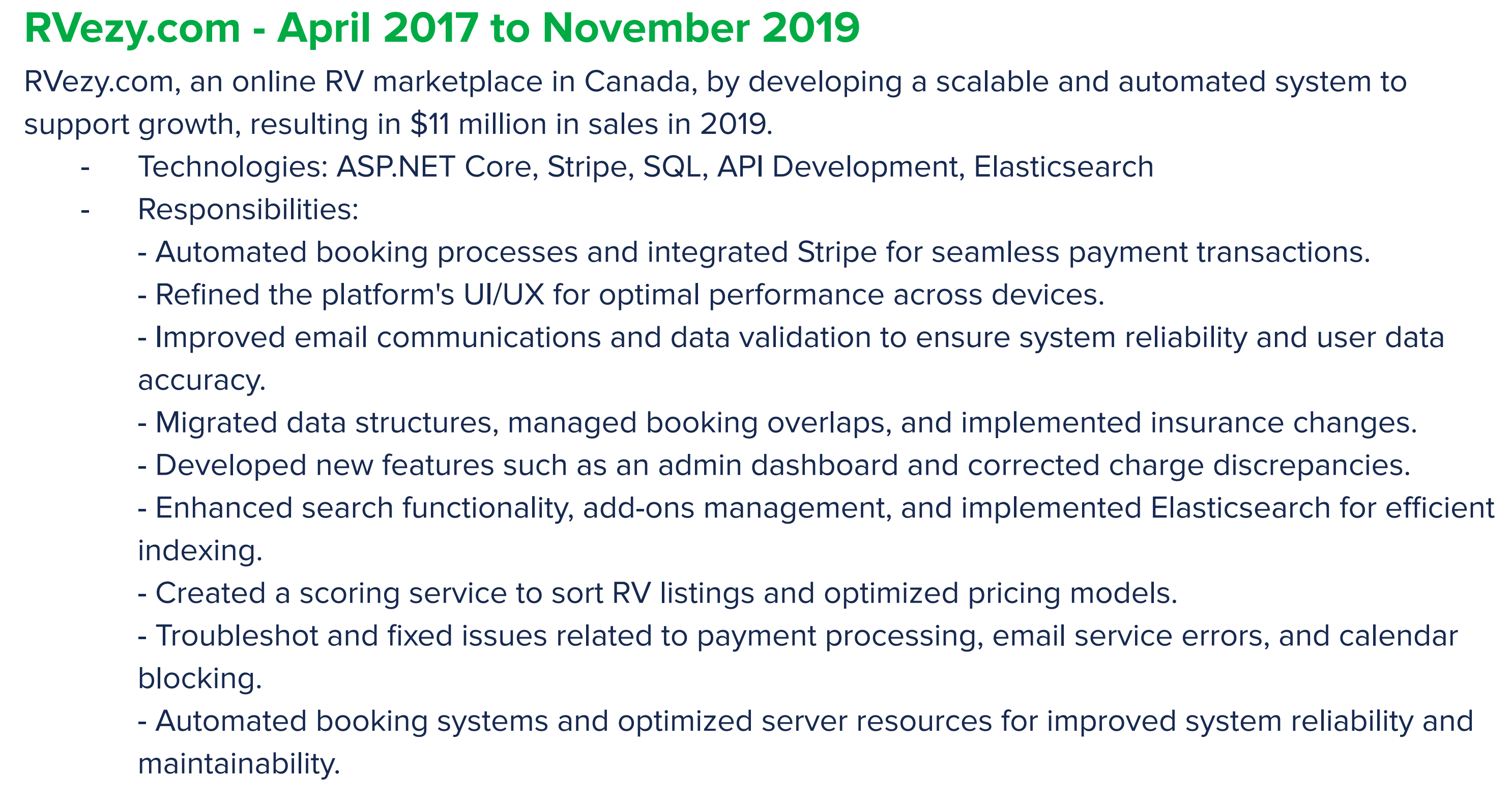

After running the automation, it is updated to:

Here are the skillsets that were in the old CV:

And here are the skillsets after the automation:

We don't send these detailed CVs to our customers directly. Instead, we summarize them to make them more concise and focused. However, having these detailed CVs internally helps us quickly match developers with new projects based on their comprehensive skill sets. This also allows us to provide proof to our customers that our developers have the necessary experience and expertise.

Conclusion

By leveraging Python, Elasticsearch, KMeans clustering, and OpenAI's powerful models, we transformed a labor-intensive task into a seamless, automated process. This approach not only saves time but also ensures that CVs are up-to-date with the latest skills and project experiences. As the tech industry continues to evolve, such automation will become increasingly vital in managing and showcasing talent effectively. Moreover, having a detailed view of developers' skill sets allows us to quickly match them with new projects, ensuring that the right people are assigned to the right tasks. Through experimentation, we determined that 5 clusters are optimal, but you can use methods like the Elbow Method or Silhouette Score to determine the most optimal number of clusters for your specific dataset. Additionally, storing the embedded vectors of the content in Elasticsearch enhances the efficiency and accuracy of the retrieval and processing stages.

And thus, our tech company was able to keep pace with its rapid growth, ensuring that each developer’s CV told the story of their continuous evolution and ever-expanding expertise, ready to take on new challenges at a moment's notice.